to infinity and beyond

Saturday, February 3rd, 2007

randform: blog on math, physics, art, and design

The elevator in the math building at TU Berlin.

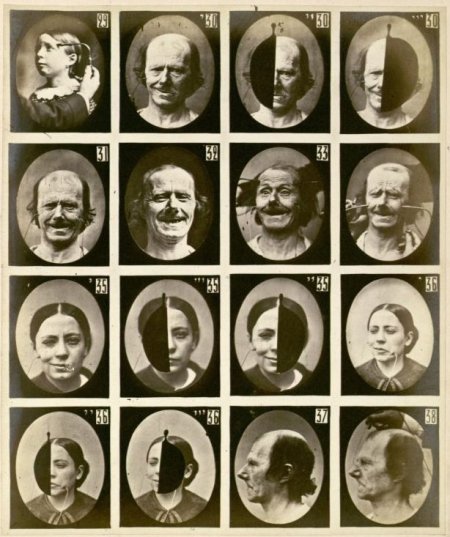

Mécanisme de la Physionomie Humaine by Guillaume Duchenne from wikipedia


The face of a human (let's include the ears) is the part of the human body which is usually addressed first as an interface to the human mind and body behind it. And most often it stays the main interface used by other humans (and animals). After a first contact people may shake hands and so on, but the face is still usually the starting point for facing each other, and together with subtle gestures it can give way to very fast judgements about the personality of people.

So it is no wonder that a portrait of a person almost always includes the face. Faces usually move, and the movement is very important in the perception of a face. However, in a portrait painting or a portrait photograph there is no movement, and still portraits describe the person behind the face, at least to a certain extent. It is also a well-known rumour (I couldn't find a study on it) that a drawing reflects the painter to a certain extent, e.g. fat artists apparently tend to draw persons more solid than thin artists do, and so on.

So it is no wonder that people try to find laws, e.g. for when a (still) face looks attractive to others and when not. Facial expressions (see above image) play a significant role (see also this old randform post). Cultural factors etc. are also important. But if we assume that all these factors have been eliminated as well as possible (e.g. by comparing bald black and white faces of the same age group looking emotionless), is there still a link between the appearance of a face and the interpretation of the human character behind the face? How stable is this interpretation, e.g. when the face has been distorted by violence or an accident? How much does the physical distortion parallel the psychological one?

All these studies are of course especially interesting when it comes to constructing artificial faces, like in virtual spaces or for humanoid robots (e.g. here) (see also this old randform post).

Similar questions were also studied in a nice Future Face exhibition at the Science Museum in London, organized by the Wellcome Trust.

One analytical method is to start with proportions, where there are some prominent old works, like Leonardo’s or Duerer’s studies, leading, last but not least, to e.g. studies in artificial intelligence which for example link “beautiful” proportions to the low complexity of the corresponding encoded information.

These questions are somewhat related to the question of how interfaces relate to processes of computing, even if one doesn't just think of robots. They also concern questions of Human Computer Interaction, as we saw above, and finally Human Computer Human Interaction, which was addressed e.g. in our work seidesein.

Update June 14th, 2017: according to the New York Times (original article), researchers from Caltech have apparently found out how macaque monkeys encode images of faces in their brains. The article describes that the firing patterns of 200 brain cells could be translated into deviations from a “standard face” along certain axes, which span 50 dimensions. From the New York Times:

“The tuning of each face cell is to a combination of facial dimensions, a holistic system that explains why when someone shaves off his mustache, his friends may not notice for a while. Some 50 such dimensions are required to identify a face, the Caltech team reports.

These dimensions create a mental “face space” in which an infinite number of faces can be recognized. There is probably an average face, or something like it, at the origin, and the brain measures the deviation from this base.

A newly encountered face might lie five units away from the average face in one dimension, seven units in another, and so forth. Each face cell reads the combined vector of about six of these dimensions. The signals from 200 face cells altogether serve to uniquely identify a face.”

If I haven’t overlooked something, the article doesn’t say how or whether that “standard face” is connected to “simple face dimensions”, i.e. “easy to compute facial features” as mentioned above. From very briefly browsing and diagonally reading the original article, I understand that the researchers pinpointed 400 facial features, 200 for shape and 200 for appearance, then looked in which directions those move for a set of faces, extracted those “move directions” via a PCA, and then noticed firstly that specific cells reacted mostly to only 6 dimensions and secondly that the firing rate varied, which apparently allowed them to encode specific faces in a linear fashion in this 50-dimensional space. I couldn’t find out in these few minutes of reading whether the authors give any indication of how e.g. the “shape points” (figure 1a in the image panel) move when moving along one of the 25 shape dimensions, i.e. in particular whether some kind of Kolmogorov complexity features could be extracted (as seems to be done here) or not.
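To make the summary above a bit more concrete, here is a minimal sketch of that kind of pipeline in Python with NumPy. It is not the researchers' code: the face features are random numbers standing in for measured data, the number of faces (500) is arbitrary, and the "model cells" with their random weights on 6 dimensions each are purely hypothetical. It only illustrates how 200 + 200 features can be reduced by PCA to a 25 + 25 dimensional "face space", and how linear firing rates of 200 cells can then be decoded back into a point in that space.

```python
import numpy as np

rng = np.random.default_rng(0)

def pca_axes(features, n_components):
    """Return the mean and the top principal axes (as rows) of a data matrix."""
    mean = features.mean(axis=0)
    # SVD of the centred data; the rows of vt are orthonormal principal axes.
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:n_components]

# Synthetic stand-in for measured faces (assumption): 500 faces,
# each with 200 shape features and 200 appearance features.
n_faces = 500
shape = rng.normal(size=(n_faces, 200))
appearance = rng.normal(size=(n_faces, 200))

shape_mean, shape_axes = pca_axes(shape, 25)
app_mean, app_axes = pca_axes(appearance, 25)

# Coordinates in the 50-dimensional face space.
# The average face sits at the origin by construction.
coords = np.hstack([
    (shape - shape_mean) @ shape_axes.T,
    (appearance - app_mean) @ app_axes.T,
])

# Hypothetical model cells: each one responds linearly to 6 of the 50 dimensions.
n_cells = 200
weights = np.zeros((n_cells, 50))
for cell in range(n_cells):
    dims = rng.choice(50, size=6, replace=False)
    weights[cell, dims] = rng.normal(size=6)

rates = coords @ weights.T  # firing rates, shape (n_faces, n_cells)

# Linear decoding: least-squares recovery of the coordinates from the rates.
decoded, *_ = np.linalg.lstsq(weights, rates.T, rcond=None)
decoded = decoded.T

print("faces recovered from firing rates:", np.allclose(decoded, coords))
```

In this toy setting the decoding works because the 200 weight vectors together span all 50 dimensions, so the linear map from face space to firing rates can be inverted. Real recordings are of course noisy, so this says nothing about how well the actual decoding performs.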

It is also unclear to me what these new findings mean for the “toilet paper wasting generation” in China.

By the way in this context I would like to link to our art work CloneGiz.

Screenshot of monoface, mono's happy new year flash greeting card (slightly modified in order to make it look more like me..:)).

via cuartoderecha.

The application monoface mixes up face parts of people from mono. The application is reminiscent of a popular paper game, in which various people each draw a body part in order to design a complete creature.

cloneGiz (daytar 3/2004) also “mixes parts of faces”. It has a mathematical “selection layer”, meaning that you do not select by picking with your mouse as in monoface; instead you have to write a formula, which produces the outcome.

The main idea of cloneGiz was not to mix funny faces (although you can do this) but to ask the question of how one can control the final product (the mixed face) by changing the mathematical formula. It links the mathematical language to a visual “representation”. This project was also made in order to illustrate an ongoing project of linking “objects” to “mathematical code” (so far via the string rewriter jSymbol).

In mathematics counter-clockwise rotations are considered “positive”.

Just a short note: Science voted Perelman’s work (from 2002/3; it took the math community quite a while to work through his papers :-)) breakthrough of the year.

I attached a little silvery chain to a mathematical curve template in order to illustrate the difference between a catenary and a parabola.

Then I put the curve template (also called a French curve, also on Google Images) into the dishwasher in order to get it upright for an orderly scientific photo. The forks and knives in the image are distracting, but well, one has to live with them for now.

The experiment displays a line which is described by a hanging chain.

If one compares it with the line of a parabola given by the function f(x)=x^2 (on the curve template), then one sees that the two lines only almost fit, and in fact a better mathematical model for a hanging chain is the catenary, given by the function f(x)=cosh(x), i.e. the hyperbolic cosine (not to be confused with a hyperbola). The model is constructed via the physical picture of many chain links holding each other by the hand, each subject to a constant gravitational force, or in other words: if you cut the chain at various points then the respective chunks would fall down separately.
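For those who prefer numbers to dishwashers, here is a small Python sketch of the "almost fitting". It is only an illustration under assumptions: the chain is idealised as cosh(x), and it is compared with the parabola 1 + x^2/2, which has the same height and curvature at the lowest point. This is a rescaled and shifted version of the x^2 on the template, chosen so that the comparison is as favourable to the parabola as possible. The function names are made up for this example.

```python
import math

def catenary(x, a=1.0):
    """Height of an idealised hanging chain: a * cosh(x / a)."""
    return a * math.cosh(x / a)

def matching_parabola(x, a=1.0):
    """Parabola with the same height and curvature as the catenary at x = 0."""
    return a + x * x / (2.0 * a)

def max_gap(half_width, samples=201):
    """Largest vertical gap between the two curves on [-half_width, half_width]."""
    xs = [-half_width + 2.0 * half_width * i / (samples - 1)
          for i in range(samples)]
    return max(catenary(x) - matching_parabola(x) for x in xs)

for w in (0.5, 1.0, 2.0):
    print(f"half-width {w}: max gap {max_gap(w):.4f}")
```

Near the lowest point the two curves are practically indistinguishable, since cosh(x) = 1 + x^2/2 + x^4/24 + ..., and the gap only becomes visible further out, where the catenary rises more steeply than the parabola.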

There are very beautiful partially interactive links on the Wikipedia catenary site, which illustrate this.

The above observation is already described in this old mathematical physics paper from the early 17th century, which is beautifully accessible thanks to the work of people who had fun with mathematics. There is also a tender drawing of down parabolas.

-> about parabolic flight