character recognition linked to physics engines

Optical character recognition, usually abbreviated to OCR, is computer software designed to translate images of handwritten or typewritten text (usually captured by a scanner or a digitizer) into machine-processable text. OCR is used commercially, e.g. in PDAs. However, “handwritten” characters do not need to be constrained to letters or simple symbols; they could also be more complex shapes, if necessary in 3D. The recognition of such shapes can also be interpreted as gesture recognition.

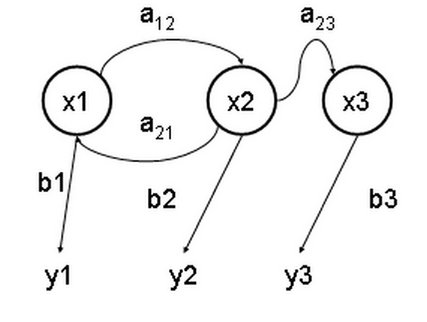

Any recognition of human input can be used to initiate some action. If the human input is a drawn image or a character, then usually both the spatial components of the gesture (i.e. the actual representation of the symbol) and the order in which it is drawn are considered important. In OCR (e.g. with letters) this means that one has to recognize, on the one hand, the corresponding letter as a shape and, on the other hand, the order in which the letters are drawn, as this gives valuable information about what shape a letter may have. In other words, if you use the English language, then the combination “the” is more likely than the combination “tbe”. So syntax and context often need to be used for recognition. But what if the generated action feeds back on the context?
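To make the “the” versus “tbe” point a bit more concrete, here is a minimal sketch of how context could overrule a pure shape match. Everything in it is an assumption for illustration: the candidate letters, their shape scores and the word plausibilities are made-up numbers, and `best_reading` is a hypothetical helper, not the method of any particular OCR system.

```python
from itertools import product

# Hypothetical output of a shape recognizer: for each drawn character,
# a list of (letter, shape score) candidates. The numbers are made up.
CANDIDATES = [
    [("t", 0.9)],
    [("b", 0.55), ("h", 0.45)],  # the second stroke looks slightly more like "b"
    [("e", 0.9)],
]

# Toy context model: how plausible a letter combination is in English.
# Again, these values are invented for the example.
WORD_PRIOR = {"the": 0.05, "tbe": 0.000001}


def best_reading(candidates, word_prior, floor=1e-9):
    """Return the letter combination with the highest shape score times context score."""
    best_word, best_score = None, 0.0
    for combo in product(*candidates):
        word = "".join(letter for letter, _ in combo)
        shape_score = 1.0
        for _, score in combo:
            shape_score *= score
        # Combinations missing from the context model get a small floor value.
        total = shape_score * word_prior.get(word, floor)
        if total > best_score:
            best_word, best_score = word, total
    return best_word


with_context = best_reading(CANDIDATES, WORD_PRIOR)
shape_only = best_reading(CANDIDATES, {})  # empty prior: only the shapes decide
```

With the empty context model the slightly better shape match “tbe” wins; with the toy word plausibilities the reading flips to “the”.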

A very nice example of character recognition in conjunction with physics engines is explained in a video on YouTube by Professor Randall Davis, which I found via cuartoderecha. Here the gestures, which are drawn on a 2D board, are read in via an e-board (like e.g. this one), recognized as shapes (where the order in which the shape was drawn is probably captured as well), transformed into vector graphics and then linked to a small physics engine in the style of The Incredible Machine.

In some sense, input in video games can be considered gesture recognition as well. However, here the gestural input usually produces a physical reaction, which in turn automatically influences the gesture itself. That's what I meant with “if the (physical) context feeds back”. This feature also appears, in slow form, in the above video, when Professor Davis changes his ball machine. I also mentioned this mechanism in my paper for the NMI 2006 (which I haven’t yet translated...).
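A rough sketch of such a feedback loop, under heavy simplifications: a ball moves in one dimension under gravity, and a hypothetical player pushes it upward whenever it is below a target height. The names `step`, `player` and `run`, the constants and the simple Euler integration are all assumptions chosen for illustration, not taken from any real game or physics engine.

```python
def step(pos, vel, push, dt=0.1, gravity=-9.8):
    """Advance a 1D ball by one explicit Euler step; push is the player's acceleration."""
    vel = vel + (gravity + push) * dt
    pos = pos + vel * dt
    if pos < 0.0:
        # The ball hit the floor: clamp it and bounce with some energy loss.
        pos, vel = 0.0, -0.5 * vel
    return pos, vel


def player(pos, target=1.0):
    """Hypothetical player: pushes upward as long as the ball is seen below the target."""
    return 20.0 if pos < target else 0.0


def run(steps=50):
    """Run the loop and record (position, push) for every step."""
    pos, vel = 0.0, 0.0
    history = []
    for _ in range(steps):
        push = player(pos)               # the input depends on what the physics shows
        pos, vel = step(pos, vel, push)  # the physics depends on the input
        history.append((pos, push))
    return history


history = run()
```

The two commented lines inside the loop are the whole point: the input is computed from the physical state, and the physical state is computed from the input, so neither can be interpreted without the other.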

Another very nice, historical example is the previsualization of a galaxy collision choreography for the IMAX film Cosmic Voyage. This previsualization was done with the Virtual Director, created by Donna Cox, Bob Patterson and Marcus Thiebaux and further developed by Cox, Patterson and Stuart Levy. Stuart Levy also once spent some time as a visiting programmer in the math group at the TU.

October 20th, 2006 at 5:49 pm

here is a small flash game that somewhat fits:

http://www.deviantart.com/deviation/40255643/